Recently in SEO Category

Would you live in a house like this?

Of course not, because as soon as some great big nasty gust of wind comes along, this quick-fix house will be flattened, gone, demolished, destroyed, wiped out... capiche? Without foundations, walls, ceilings and everything else that makes a house, it is only a matter of time before it all falls down. So why would you carry out social media activity without getting the foundations of your site right first? Time and time again people get carried away with the next best thing and forget about the one thing that supports all of their activities: their website!

Search is an ever-evolving practice and there is always a 'next best thing'. Don't get me wrong, I love a little 'tweet tweet' here and a little 'like like' there, BUT I always insist that the basics are in place first. Social media is a great way of generating buzz and noise for your brand, syndicating your content to a wider audience and engaging with a mass audience. However, it is not consistent; you will have peaks and troughs in traffic and engagement levels.

"We would like a loft extension, a conservatory, a garage, a swimming pool, decking and any other extension" said the home owner to the builder.... "but what about the foundations" .... "nah we would rather have the loft conversion".

So many companies nowadays say "but we have a Facebook page, we have a Twitter account, we are on LinkedIn", which is excellent until I look at their site, which is not optimised, not user friendly, has no clear call to action and, quite frankly, no purpose.

Would you live in a house like this?

NO - because getting builders in to lay the foundations or add plumbing and wiring to make this monstrosity stable would cost a fortune. Do it right at the beginning and all of these add-on social media activities will be much more beneficial.

Whether it is now or a year down the line, when you have to stop everything and redo your site, you will realise that the next best thing is not always the best thing for your company. Often we have started a social media strategy only to stop and work on the site first before re-launching the campaign. Why send people to a shoddy site when you can create a great site which supports all of your activity?

Would you live in a house like this?

I know I would.....a sturdy, strong house which would stand the test of time, which you could build upon and grow? .......who wouldn't??!!!

Your site is where you can convert your audience

Your site is where you can compete with your competitors

Your site is where you can talk to your audience in more than 140 characters

Your site is where you can increase your visibility on the search engines for non brand terms.

Get the basics right first, then build on your strategy. Make sure your site is:

- Optimised on and off page

- Technically sound, in terms of navigation and crawlability

- Offering appealing content, through copy or resources.

- Offering a clear call to action

Get all of these things right and then build on your activities. This way your site will improve in search engine visibility for target keywords, your users will be able to navigate through your site and find relevant content and you will have new fresh content to promote via your social media activities.


Google AdWords will crawl your landing page in the same way that it would crawl your website for organic listings, testing content relevancy, coding and load speed. This process is aimed at providing paid-search visitors with the same level of relevancy they could expect from the natural listings.

To quickly explain the process, Google looks at your keyword, ad text and landing page and compares the relevancy of all three, making an estimation of visitor experience and applying an overall score, known as the "Quality Score."

Google describes the Quality Score as follows: "The AdWords system calculates a 'Quality Score' for each of your keywords. It looks at a variety of factors to measure how relevant your keyword is to your ad text and to a user's search query. A keyword's Quality Score updates frequently and is closely related to its performance. In general, a high Quality Score means that your keyword will trigger ads in a higher position and at a lower cost-per-click (CPC)."

Advertisers who spent ages designing, building and perfecting the look of their landing page at the cost of optimisation and content will find that their keywords receive very low Quality Scores and, as a result, high CPCs and less traffic than desired.

For the purposes of this post I will assume you know how to build an optimal PPC campaign and concentrate on the Landing Page optimisation.

Starting at the top and working downwards:

Page Title:

It is recognised that Google generally indexes 65 characters of a page title, and when it comes to AdWords it is beneficial to utilise this space to give the crawler its first indication of what this landing page is about.

Choose keywords from your AdGroup that you favour and construct a page title containing those keywords.

Example: If your keywords and ad text are focussed on Xbox consoles and your landing page content is focussed on selling Xbox consoles, don't use a page title like "Bill's Electronics - Selling the latest electrical goods". Get Xbox keywords in there in a natural way (don't just put a load of keywords in a string; make it a readable title).

Meta Description:

The Meta Description is not seen by AdWords visitors, but it is crawled and may add a tiny bit of weight to relevancy. Make sure that you use the 152 characters available to produce a natural-sounding page description that continues the theme of the product and includes keywords.
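As a rough aid for the two length guidelines above, here is a minimal Python sketch that pulls the page title and Meta Description out of a landing page and checks them against the 65 and 152 character figures quoted in this post. The class and function names (`HeadParser`, `check_head`) and the sample page are my own illustrations, not part of any Google tool.

```python
from html.parser import HTMLParser

TITLE_LIMIT = 65         # title length quoted in this post
DESCRIPTION_LIMIT = 152  # description length quoted in this post


class HeadParser(HTMLParser):
    """Collects the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_head(html):
    """Return the title/description lengths and whether they fit the limits."""
    parser = HeadParser()
    parser.feed(html)
    parser.close()
    title = parser.title.strip()
    description = parser.description.strip()
    return {
        "title_length": len(title),
        "title_ok": 0 < len(title) <= TITLE_LIMIT,
        "description_length": len(description),
        "description_ok": 0 < len(description) <= DESCRIPTION_LIMIT,
    }


# Hypothetical landing page used only to show the call.
page = (
    "<html><head><title>Buy Xbox Consoles Online</title>"
    '<meta name="description" content="Xbox consoles in stock.">'
    "</head><body></body></html>"
)
print(check_head(page))
```

An empty title or description is reported as not OK, since leaving either blank wastes the space this section is about.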

Meta Keywords:

It is widely accepted that Meta Keywords do not carry weight from an SEO angle but again, they get crawled and if they add anything at all to the relevancy of your PPC landing page it is worth the moment of effort it requires. DO NOT keyword stuff here, simply choose some of your short-tail, broader keywords and add a small selection to the Meta keywords tag.

Headers:

Heading tags (<h1> to <h6>) carry a decent amount of weight when it comes to page relevancy, so make sure that your headlines include a sought-after keyword in a natural way that will make sense to visitors.

Text Style Tags:

Tags that embolden your text, such as <b> and <strong>, and tags that make text italic, such as <i> or <em>, should be used to place emphasis on a selection of keywords. This brings emphasis for the reader but also for the crawler. Important: use this sparingly and relevantly. The reader is important, and placing emphasis on every other word will likely drive them elsewhere.

Image Alt Tags:

Image alt attributes should contain chosen keywords as well. They are often overlooked when optimising a page, yet placing keywords into image alt text simply reinforces the relevancy of the landing page in relation to its keywords.

Code to Text ratio:

The "code to text ratio" of a landing page is very important as Google needs to see that you are presenting enough information to visitors, but also that you are presenting that information in an optimal manner. General consensus is that a "code to text ratio" of around 25% is optimal for crawlers, so getting as close to this number as realistically possible will help with optimisation efforts. There are various tools available on the web that will tell you your code to text ratio.
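For a quick check without a third-party tool, the ratio can be approximated in a few lines of Python. This is only a sketch under one assumption: I define the ratio as visible text characters divided by total page characters, ignoring anything inside script and style blocks. Online tools may count bytes or treat whitespace differently, so expect small differences. The names `TextExtractor` and `text_ratio` are mine.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def text_ratio(html):
    """Visible text as a percentage of the whole page, measured in characters."""
    if not html:
        return 0.0
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    # Collapse runs of whitespace so indentation in the source is not counted as text.
    text = " ".join(" ".join(extractor.parts).split())
    return 100.0 * len(text) / len(html)


# Hypothetical snippet: the script content counts as code, not text.
print(round(text_ratio("<script>var x=1;</script><p>hi</p>"), 1))
```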

Site Inclusion:

Google Crawlers will probe links from your landing page to make sure you are not simply linking to alternative sites that are not relevant to the landing page. Try to make sure that your top-level navigation is included. A way to do this without distracting users from the content or design features is to add text links below the fold. If you feel that this approach damages your design, links to your homepage, sitemap, privacy policy, terms & conditions and contact us in the footer of the landing page should suffice.

Page Load Speed:

The speed at which your page loads has a significant effect on quality scores. Google is of the belief that clicking through from the search engine results pages (SERPs) should be as quick as flicking a page in a magazine. If your website does not provide this user experience, you are going to get penalised for it.

Use of multiple images and Flash movies will slow a page as they take time to load, so make sure you're not going overboard with your design.

Again, there are various 3rd party tools available to check page load speeds, so check it out and if you're hitting over 3 seconds it may be time to trim your page a little.

Summary

There are many other factors that affect quality score and this guide will not guarantee 10/10 for every keyword, but getting the above right will go a long way to improving the performance of an AdWords campaign.

The quest for high quality scores requires work and attention to detail but creating a quality PPC campaign and an optimal landing page will cover off visitors, leaving you to focus on conversions.

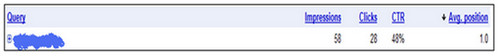

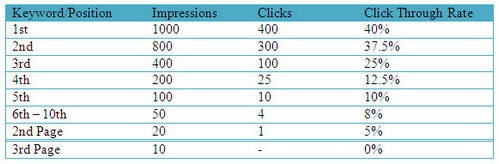

I quickly found out that Google recently released a very exciting new feature in Webmaster Tools that enables us to see the number of impressions and clicks for our most popular keywords, together with the rankings of keywords for a defined period. This was interesting, since it was now possible to analyse how the number of impressions and clicks differed based on the different positions of a website in Google search results. In fact, this new Webmaster Tools feature is quite close to the click-through-rate data provided in Google AdWords.

Ranking Report in Google Webmaster Tools

I was delighted to find such detailed and useful information. However, I quickly realised that what Google might be indirectly telling us is quite exceptional and could drastically change the way the 'search industry' reports on results.

The most interesting thing I discovered is that Google is now showing data on rankings across a range of results: detailed information for each of positions 1 to 5, combined data for positions 6-10, and then combined data for the 2nd page and the 3rd page of the search engine results pages (SERPs). With the recent advent of Google Personalised Search, rankings are no longer the same for every visitor, and never before had there been a good way of tracking the impact of this.

What Google is trying to make us understand here is that a website will no longer have a particular ranking for a specific keyword, but will have a range of rankings determined by various personalisation factors.

So, I believe search experts will now have to analyse, optimise and report on website rankings differently. Whilst in the past an agency or in-house search specialist might have reported on exact website rankings for targeted keywords, reports would now make more sense if they provided the complete range of ranking positions that a website has for a keyword, together with the related traffic. Below is an example of how a section of a search report might look:
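A minimal Python sketch of such a report section, using the same position ranges that Webmaster Tools shows. The function name `ranking_range_report`, the keyword and every figure below are made up purely for illustration; they are not real Webmaster Tools data.

```python
def ranking_range_report(keyword, rows):
    """Format impressions, clicks and CTR for each ranking position range."""
    lines = [
        f"Keyword: {keyword}",
        f"{'Position':<10}{'Impressions':>12}{'Clicks':>8}{'CTR':>8}",
    ]
    for position, impressions, clicks in rows:
        # Guard against ranges that had no impressions in the period.
        ctr = 100.0 * clicks / impressions if impressions else 0.0
        lines.append(f"{position:<10}{impressions:>12}{clicks:>8}{ctr:>7.1f}%")
    return "\n".join(lines)


# Hypothetical figures, one row per range (position, impressions, clicks).
sample_rows = [
    ("1", 200, 50),
    ("2", 400, 60),
    ("3", 300, 30),
    ("4", 150, 9),
    ("5", 100, 4),
    ("6-10", 500, 10),
    ("2nd page", 250, 2),
    ("3rd page", 0, 0),
]
print(ranking_range_report("example keyword", sample_rows))
```

Reporting the whole spread like this shows where most of the impressions actually occur, rather than quoting a single position that only some searchers ever see.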

Google recently came up with its netbook-centric operating system 'Chromium OS' and at the same time released its source 'openly' to the public. It is therefore free for anyone to download, and developers can play around with the system and tweak it as they like.

I cannot stop thinking that we are going back to the times when the mainframe was actually the computer, much like Sun's old assumption that the network is the computer. With the release of Chromium OS, Google is putting more emphasis on the idea that browser-based applications are the future and is coming up with its first true cloud-based operating system.

Several tests have already been performed by leaders in the information technology industry and the results were interesting. One of the main results was that Chromium OS is a very fast operating system. At a recent press conference Google claimed that Chrome OS had a boot time of 7 seconds, and this isn't an exaggeration at all.

Performance of applications running on Chromium browser essentially comes down to the speed of the Chromium browser. That makes us realise that the new rule of thumb for Google could be 'speed'.

Google certainly have the power to simulate and estimate the amount of time it takes to connect to a page, the way a page renders in a browser and how long that takes, and how people react to those times, and to use all of this to influence how a page is ranked and classified, and how much of the page is crawled and indexed. This will also include embedded material on a page such as JavaScript or Flash content.

- Avoid overloading a website with images since large files take longer to load.

- JavaScript has some advantages over Flash. Flash is overdone, takes longer to load and sometimes makes websites over-complicated. However, it is recommended to place JavaScript in an external file.

- If your website uses videos, it is better to host them on YouTube and link to them from your website than to host them directly on the website. YouTube is so big that it has the ability to load videos quickly.

- Avoid "Enter Site" introduction pages; they have a high load time and are so 'old-school'. It's better to let visitors go straight to the information.

- Keep the mark-up simple. Most HTML tags can be styled via an external CSS so there is no need for them to be placed in a nested table for example.

- It is recommended to start out a website with XHTML and CSS. Using tables for layout is not recommended, since they can cause a big mess in the mark-up and ultimately slow down loading times. Storing CSS information in an external file keeps your website neat and helps keep page-load times fast.

- Server-side compression software is also very useful: it ensures files are at their optimum size before being sent to the client browser. This works particularly well for script output (e.g. PHP) and CSS files, where the focus is not on semantics.

- Images must be optimised to the correct size/weight. This is important because large image files take much longer to load than a lighter image that has already been processed in image editing software. However, this may imply some compromise on picture quality.

- Images should ideally be used only for headers or logos and never for large bodies of text. Static text takes only a few bytes as compared to images that consume thousands of bytes.
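To get a feel for what the server-side compression mentioned in the list above can save, here is a small Python sketch that gzips a payload and reports the reduction. In practice the web server does this for you; the function name `compression_saving` and the repetitive sample stylesheet are assumptions of mine, and real files will compress less dramatically.

```python
import gzip


def compression_saving(payload: bytes) -> float:
    """Percentage of bytes saved by gzip-compressing the payload."""
    if not payload:
        return 0.0
    compressed = gzip.compress(payload)
    return 100.0 * (1 - len(compressed) / len(payload))


# Hypothetical stylesheet: very repetitive text, which compresses extremely well.
css = b".menu li { margin: 0; padding: 0; }\n" * 200
print(f"{len(css)} bytes before, saving {compression_saving(css):.0f}%")
```

Text formats such as HTML, CSS and script output benefit most; images like JPEG or PNG are already compressed, which is why they need to be optimised separately.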

The new Google race is now open. Is your website fast enough?

Having gone through a series of SEO evaluations these last few weeks, I was shocked to come across so many cases of websites with a bad internal linking structure. I now think it's essential to stress good internal linking, since I have the impression that web designers often overlook the importance of having a well-structured site in terms of internal linking. The current situation is very sad since a lot of websites are not benefiting from the power of internal linking. I have therefore compiled a short list of factors that one should consider while building the website structure.

Good Navigation - The most important issue here is to make sure that the site navigation can be spidered correctly by the search engines. We can ensure this either by using text-based navigation with descriptive anchor text, or by using image-based navigation with meaningful alt attributes attached to every image link. Avoid JavaScript and Flash navigation because it is still not crawled well by the search engines. If you still want to keep your 'flashy' navigation, then I suggest you include an alternative navigation that the major search engines can spider. For example, you could have a text-based navigation at the bottom of your page; this will help your inner pages be more spiderable.

XML Sitemap - I cannot stress enough the importance of having a good XML sitemap on your website. Sitemaps provide an overview of the site at a single glance and at the same time help search engines crawl the website. Submitting an XML sitemap to Google Webmaster Tools, for example, can be very useful since it gives the search engines a concise format that provides spiders with a super-fast blueprint for indexing a website. Furthermore, sitemaps also improve web usability as they are an alternative form of site-specific search, which brings users to the information they need more quickly.
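If your platform does not produce a sitemap for you, a basic one is easy to generate. Here is a minimal Python sketch, assuming you already have a list of the URLs you want crawled. The function name `build_sitemap` and the example.com addresses are placeholders of mine; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in the URL for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs for illustration only.
print(build_sitemap([
    "http://www.example.com/",
    "http://www.example.com/products/",
]))
```

The sketch only emits the required loc element; optional elements such as lastmod can be added in the same way once you have that data.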

Breadcrumbs - I believe breadcrumbs are excellent internal linking tools. Being 'links' by nature, they aid with internal linking and consequently increase the search engine visibility. In addition to anchor text differentiation, breadcrumbs are very useful since they increase the general usability of the website by allowing users to know exactly where they are on the website.

Links in Content - I have had the chance to analyse different kinds of websites in different industries, and it was quite common to see a lack of links in their copy. It's essential to have in-content links, since not only are they more likely to have higher click-through rates (increased confidence path), but the neighbouring text can also add more significance to the link. Therefore, the rule of thumb here is to have links in the copy of the website whose anchor text contains targeted keywords.

Links to Important Pages - It's essential to always ensure that all important pages are well linked from other pages on the website. I sometimes find it amazing how some of the most important pages of a website are not properly linked from other pages. It is better to link to them directly from the homepage so that they can benefit from the link juice passing from the homepage. But time and again I see websites with important pages buried too deep and ending up with no PageRank at all. And it's not uncommon to find those pages not indexed by the search engines.

Cross-check robots.txt - This may look stupid, but I have come across cases where important pages of a website were not being crawled because they sat in a section that robots.txt was preventing spiders from crawling. This mainly happens by mistake or when new pages are added to the website. Sometimes webmasters forget to go back to their robots.txt and check whether all crawlable/non-crawlable sections are up to date. In brief, your important pages need to be findable; if not, there's no way they'll get crawled and indexed.
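This cross-check is easy to automate with the robots.txt parser in Python's standard library. Here is a minimal sketch, assuming you keep a list of the pages you consider important. The function name `blocked_pages`, the sample rules and the example.com URLs are illustrations of mine.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt rules stop a crawler from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical rules and pages for illustration.
rules = "User-agent: *\nDisallow: /private/\n"
important = [
    "http://www.example.com/products.html",
    "http://www.example.com/private/offer.html",
]
print(blocked_pages(rules, important))
```

Running something like this whenever new sections are added to the site catches the "forgot to update robots.txt" mistake before the search engines do.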

Linking Policy - It is very important to be extremely consistent in your linking behaviour. What I mean is that while linking pages we need to be meticulous about how we are building the links. I once had to rebuild the links of a whole website since links to the homepage were very inconsistent. Some links were pointing to the .com page whereas others were pointing to the .com/index.php page. The website also had some major canonicalisation issues where several links were pointing to identical pages but with different URLs. Cases like this actually reduce the power of internal linking since the link juice is diluted around the site instead of being intelligently focused on the essential pages. In brief, a link policy should be set up so that everyone building links knows exactly how and where to link them.
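One way to enforce such a policy is to run every internal link through the same normalisation step before it is published. Here is a minimal Python sketch, assuming the policy is "lower-case host, no default document in the URL, no fragment". The function name `canonical_url` and the list of default documents are my assumptions, so adjust them to your own server setup.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumed default documents; change to whatever your server actually serves.
INDEX_FILES = ("index.php", "index.html", "index.htm")


def canonical_url(url):
    """Normalise a URL so that equivalent links all point to one address."""
    parts = urlsplit(url)
    path = parts.path or "/"
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            # Strip the default document but keep the trailing slash.
            path = path[: -len(name)]
            break
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


# Both variants of the homepage link collapse to the same address.
print(canonical_url("http://www.example.com/index.php"))
print(canonical_url("http://WWW.Example.com"))
```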

As a reminder, good internal linking ensures that all pages on your website get properly spidered and indexed by the search engines. It increases the relevancy of a page to the targeted keyword phrase, and it allows link juice to pass properly to internal pages, increasing their PageRank. That's it; I hope this helps you tune and enhance your internal link structure.

Earlier this month, Seth Godin wrote a blog post entitled 'Lessons from very tiny businesses'. This piece outlined 5 different things we can learn from small businesses, using examples of companies he has encountered. His second point was 'Be micro focused and the search engines will find you'.

Shortly after reading this, I was searching for a carpet cleaning service. I had used one earlier this year, but couldn't remember his number, so I went to his web site. Now bear in mind this is a one-man show, so what you would typically expect is at the most two pages - landing page and contact page. What you get is something else: 9 fully-optimised pages, a blog, and even a Twitter stream!

The thing that impressed me most, however, was the blog, 'My carpet cleaning blog'. Since November 2008, Chris (the carpet cleaner) has been diligently writing up many of his daily jobs as blog posts. Each one is titled with a variation on the phrases 'Carpet cleaning' or 'carpet cleaner', plus the location of the job, either as a postcode (W4, W14) or as the name of the location (Fulham, Wandsworth), and includes some detail on the job in question. In this way he is targeting relevant searches for carpet cleaning all over Greater London. Oh, and he follows these posts up with Tweets as well.

But that's not all. When he came to clean my carpets, Chris also explained how he has managed to get himself placed in Google Local Business ads for not one, but four different postcodes! By asking customers to write reviews, he is managing to come top of the list as well.

Ok, so not everything is rosy with his site from an SEO point of view. URLs need optimising, his blog is one of those 'wysiwyg' ones, and he has literally no incoming links at all. Still, with little technical background and knowledge, Chris has realised the importance of Google as a targeted traffic generator, learnt some of the basic rules of SEO, and applied them assiduously and with great effect to one set of keyword combinations. Since last November, the site has been appearing on the front page of Google for many local London searches related to carpet cleaning, and the number of contacts from his web site has literally doubled!

What's the lesson for me in all this? It's just as Mr Godin says - or as I interpret it anyway: sometimes, as we work on SEO for large organisations in highly competitive markets, we spread ourselves too wide, and look to achieve too much, making it far more difficult to deliver tangible results. Instead we need to identify where we can make a difference, and we need to focus on it. If an inexperienced one man band can do it, we have no excuses.

The Renault UK results page (#7) has matching breadcrumbs on the destination page:

Today, a search for mobility provides the same results with "normal" URLs:

So what does this mean? Should we all style out our sites with Hansel and Gretel in mind? Keeping Google's usability priorities in mind, I think breadcrumbs should be a mainstay of any site anyway. Also, I do believe this is a feasible permanent change that we may see some time in the future.

Displaying breadcrumbs in SERPs clearly maps out for searchers which section of the site their result is located in; this will enable searchers to better read those URLs and have a clearer idea of whether or not that result is appropriate for their query. Also, if this is going to be a major SERPs change, it's important that the breadcrumbs don't go too deep since, as always, there is limited character space.

I'm looking forward to seeing how this alteration plays out - if you see any more examples, shoot them to me @ChelseaBlacker.


More on the "Vince update" later on; first, a brief history of important updates to Google's search algorithm.

The "Florida Update"

On November 16th 2003 Google made a major update to their search algorithm. Named the "Florida update", it had a major effect on a very large number of websites at the time and came to change the course of search engine optimisation.

Aaron Wall from SEObook says: "The Google Florida update was the first update that made SEO complicated enough to where most people could not figure out how to do it. Before that update all you needed to do was buy and/or trade links with your target keyword in the link anchor text, and after enough repetition you stood a good chance of ranking."

Pre-Florida update prominent search engine ranking could be quite easily achieved by doing basic reciprocal link-building, on-page keyword stuffing, and using repetitive inbound anchor text in links.

Post-Florida update a huge number of pages, many of which had ranked at or near the top of the results for a very long time, simply disappeared from the search engine results altogether.

The "rel=nofollow tag Update"

In January 2005 Google contributed to changing the structure of the Internet when it proposed the link rel=nofollow tag. It was originally introduced only to stop blog spamming, but shortly afterwards it also came to affect link buying. In the eyes of Google you are considered a spammer, and risk getting penalised, if you buy links without using rel=nofollow on them.

In a link the tag looks like this: <a href="http://www.baseonesearch.co.uk" rel="nofollow">Base One Search</a>

Plenty of prominent websites have adopted the nofollow tag, including Wikipedia, Facebook, Flickr and YouTube, and most blog platforms support the tag in the comments section.
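If you want to see which links on one of your own pages carry the tag, a short script will do. Here is a minimal Python sketch that splits a page's links into followed and nofollow lists; the names `LinkAudit` and `audit_links` are my own, and the second link in the sample is a made-up placeholder.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Sorts the links on a page by whether they carry rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "external nofollow".
        rel_values = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


def audit_links(html):
    """Return (followed, nofollow) lists of link targets found in the HTML."""
    parser = LinkAudit()
    parser.feed(html)
    parser.close()
    return parser.followed, parser.nofollow


sample = (
    '<a href="http://www.baseonesearch.co.uk" rel="nofollow">Base One Search</a> '
    '<a href="http://www.example.com/">Example</a>'
)
print(audit_links(sample))
```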

"By adding rel="nofollow" to a hyperlink, a page indicates that the destination of that hyperlink SHOULD NOT be afforded any additional weight or ranking by user agents which perform link analysis upon web pages (e.g. search engines)." (http://microformats.org/wiki/rel-nofollow)

The "Universal Search Update"

In May 2007 Google launched their Universal search update. Universal search means that search engine results are blended with selected content from Google's "vertical search databases". The vertical search content is blended directly into the organic search results. Before the "Universal search" update Google gave a list of 10 text-based search engine results.

The "vertical search databases" Google blend into the organic search engine results are: News, Videos, Products, Maps, Images, Books & Blog posts

Today, optimising your website for Universal search is important. By adding alt tags and keywords to your images, listing your business on Google Maps, creating videos and optimising their titles, descriptions and tags, you can increase your chances of achieving prominent search engine rankings.

The "Vince Update"

In October 2008 Google CEO Eric Schmidt gave a hint of things to come, i.e. the "Vince update". In an interview he talked about "brands"; the report of it reads:

"The internet is fast becoming a "cesspool" where false information thrives, Google CEO Eric Schmidt said yesterday. Speaking with an audience of magazine executives visiting the Google campus here as part of their annual industry conference, he said their brands were increasingly important signals that content can be trusted." He continued: "Brands are the solution, not the problem," Mr. Schmidt said. "Brands are how you sort out the cesspool." "Brand affinity is clearly hard wired," he said. "It is so fundamental to human existence that it's not going away. It must have a genetic component." (http://adage.com/mediaworks/article?article_id=131569)

The "Vince update" has caused a bit of an outcry in the search community because it's believed (and proven) that, with the update, Google is now favouring brands/corporations for core category keywords. In a blog post, Aaron Wall from SEObook showed that changes had been made in the search engine results, with evidence of big brands being favoured. For example, in mid-January three major US airlines all of a sudden began getting top rankings for "airline tickets" (see below).

(http://www.rankpulse.com/airline-tickets)

Addressing it as a "minor change", Matt Cutts says the change is about factoring trust more into the algorithm for more generic queries rather than pushing major brands to top search engine results.

So does this latest Google "update" - "minor change" mean that big brands/corporations can take a back seat and receive top search engine rankings in Google by default? I think not, the "Vince update" may well be just a minor change. Google is continually tuning its algorithms to give most relevant results for users.

For navigational-type searches (aka research queries, "going through the front door in the shopping centre") such as cars, airline tickets etc. brand/corporation sites are maybe what searchers are looking for? In the above illustrated example, shouldn't there be a couple of airline companies in the results when you search for airline tickets?


So what are expiring domain names?

Every day thousands of domain names expire but get bought up and change ownership before they are deleted and become readily available again for registration. In the domain name industry, the aftermarket of buying expiring domain names is big business. Lots of "domainers" and domain name companies spend hours upon hours sifting through lists of upcoming expiring domain names.

Nowadays buying expired, or pending delete, domain names has become more of a mainstream thing, even outside domaining circles. It takes an expired domain 30 days before it goes back into the pool of masses and becomes readily available again to register at any domain name registrar. Within this 30-day period, between expiring and becoming available again, thousands of domain names change hands in what is called the 'domain name aftermarket'.

The life cycle of a domain name

The life cycle of a generic domain name (.com, .net, .org etc) explained by ICANN (Internet Corporation for Assigned Names and Numbers).

(Source: http://www.icann.org/en/registrars/gtld-lifecycle.htm)

Expiring domain names were registered a year or more ago by someone who has not renewed them. Basically, once a domain expires it enters an "Auto Renew Grace Period" (see above). This period usually lasts for 30 days and the owner of the domain is able to renew at any time during that time frame.

Should the owner fail to renew the domain, it will enter the "Redemption period" (see above). In the redemption period the domain name registrar becomes the owner of the domain (the original owner can still come in and renew it), and will try to sell the domain through auctions.

After the pending delete period the domain name is a goner for both the original owner and the registrar. The domain will become readily available at any registrar, as it goes back into the pool of masses.
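When sifting through lists of domains it helps to know roughly which stage a name is in. Here is a rough Python sketch of the stages described above, working from the expiry date. Only the 30-day auto-renew grace period comes from this post; the 30-day redemption and 5-day pending delete lengths are the usual ICANN figures as far as I know, and registrars can vary, so treat all three constants, and the function name `lifecycle_stage`, as assumptions.

```python
from datetime import date, timedelta

AUTO_RENEW_GRACE_DAYS = 30  # "usually lasts for 30 days" (from this post)
REDEMPTION_DAYS = 30        # assumption: typical gTLD redemption period
PENDING_DELETE_DAYS = 5     # assumption: typical gTLD pending delete period


def lifecycle_stage(expiry, today):
    """Estimate which life cycle stage a domain is in, given its expiry date."""
    days_since_expiry = (today - expiry).days
    if days_since_expiry < 0:
        return "registered"
    if days_since_expiry < AUTO_RENEW_GRACE_DAYS:
        return "auto-renew grace period"
    if days_since_expiry < AUTO_RENEW_GRACE_DAYS + REDEMPTION_DAYS:
        return "redemption period"
    if days_since_expiry < AUTO_RENEW_GRACE_DAYS + REDEMPTION_DAYS + PENDING_DELETE_DAYS:
        return "pending delete"
    return "available for registration"


# Hypothetical expiry date for illustration.
expiry = date(2010, 1, 1)
for offset in (10, 45, 62, 70):
    print(offset, lifecycle_stage(expiry, expiry + timedelta(days=offset)))
```

This is an estimate only: a registrar may shorten the grace period or move the name to auction earlier, so always confirm the status with a WHOIS lookup before bidding.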

Domain name auction houses

Domain auction houses collect expiring domain names from different registrars and hence, have varying catalogues of names to browse. The better known ones and their major affiliate registrars are:

- SnapNames, affiliated with Moniker, MelbourneIT, DirectNIC

- NameJet, affiliated with Network Solutions, eNom

- Afternic, affiliated with Tucows

- Go Daddy, which runs its own Go Daddy Auctions

They work on different platforms, but what they have in common is that there is an auction, and if you are the highest bidder when the auction ends, the domain is yours.

Most popular domain name registrars

The world's top 15 registrars with total domains in millions. (I highly recommend that you check out the link and play around with it; it deserves a blog post of its own.)

(Source: http://www.registrarstats.com/Public/RegistrarMarketShareMain.aspx)

So why should you care about expiring domain names?

Many expiring domain names hold authority in the eyes of search engines, stemming from their link juice, directory listings and the age of the domain. Buying one is the shortcut to owning a site with a reputation. The reputation and authority are carried over to the new owner; they are never voided. Domain names that have been deleted and become available to register again lose many of their juicy features.

With a freshly registered domain name you have to walk through the dark forest; with an expiring domain name you can cruise through the woods on a bike. Buying expiring domain names can give you a domain with existing link juice. It can give you a domain already listed in dmoz and the yahoo directory, it can already have a couple of .edu and/or .gov back links, and it can give you a domain of ripe old age.

Always do your research! However, don't expect to find expiring domain names like seo.com. You will come across HEAPS of junk names. Lots of crap is expiring and for that reason lots of expiring domain names deserve to be buried and forgotten. But, there are gems to be found! Make sure you always double check domain name age, PR, back links and so forth.
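The double checking described above can be sketched as a simple filter over a list of candidates. Everything in this example is hypothetical: the `ExpiringDomain` fields, the threshold values and the sample domains are made up for illustration, and in practice you would fill in the numbers from whichever research tools you trust.

```python
from dataclasses import dataclass


@dataclass
class ExpiringDomain:
    # Hypothetical record; fill these in from your own research.
    name: str
    age_years: int
    pagerank: int
    backlinks: int
    edu_gov_links: int


def shortlist(domains, min_age=3, min_pagerank=2, min_backlinks=50):
    """Keep domains that meet every threshold, strongest link profile first."""
    keep = [
        d for d in domains
        if d.age_years >= min_age
        and d.pagerank >= min_pagerank
        and d.backlinks >= min_backlinks
    ]
    # Rank by .edu/.gov links first, then by total back links.
    return sorted(keep, key=lambda d: (d.edu_gov_links, d.backlinks), reverse=True)


# Made-up sample data, not real auction listings.
candidates = [
    ExpiringDomain("old-garden-blog.example", 8, 4, 320, 2),
    ExpiringDomain("fresh-junk.example", 1, 0, 3, 0),
    ExpiringDomain("city-guide.example", 5, 3, 120, 0),
    ExpiringDomain("thin-links.example", 6, 3, 10, 1),
]

if __name__ == "__main__":
    for domain in shortlist(candidates):
        print(domain.name)
```

The thresholds are deliberately arbitrary defaults; tighten or loosen them depending on how much junk you are willing to wade through.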

Best places to buy expiring domains?

SnapNames

http://www.snapnames.com

SnapNames is probably the most prominent domain auction company. SnapNames offers an "In Auction" section that works like any other online auction site (think eBay for the uber geek). They also offer an "Available Soon" section, where anyone can join an auction provided they place a bid on the domain before the auction's start date. This is good for serious bidders, because it takes out people who may not be serious about the auction process.

SnapNames tries to "snap" expiring domain names from all registrars, but you will be more successful in your buying if you target expiring domain names from registrars exclusively affiliated with SnapNames. Prices start from $59 each and you will only get charged if you win the expiring domain name.

Go Daddy Auctions

https://auctions.godaddy.com

Go Daddy is both a domain name registrar and an auction house. It is my personal favourite, mainly because it is the world's biggest domain name registrar, meaning a lot of domain names also expire through it. Unfortunately, a lot of poor domain names expire through Go Daddy too. There is a $4.99 annual fee to bid on their expiring domain names.

Go Daddy 'Closeout' domains are domains that have already been through a domain name auction without receiving a bid. Closeouts are sold for a flat fee of just $5 plus an annual registration fee.

Go Daddy Expiring Domains start at $10 plus an annual registration fee, but the price may increase since it is set up as an auction. Expired names are, most of the time, more valuable than 'Closeouts', since Go Daddy Auctions first makes a domain available in the expired names auction and, if it does not sell, then places it in the 'Closeouts' section.

The best kept secret tools when buying expiring domains!

I am intentionally keeping this section very short, as I don't want to give away everything, but I realise I may have anyway. You have to learn this yourself....

The first tool I want to mention is the "Best Upcoming Auction" tool from DomainTools. My tip here is to use the filters in the right hand menu. Most expiring domains found here are auctioned off on SnapNames. Check it out on: http://www.domaintools.com/advanced-auction/top-picks.html

The second tool is Fresh Drop. I used to spend a lot of hours on this website going through expiring domains and watching/bidding in auctions. This tool is a bit under the radar, even amongst domainers. The Fresh Drop tool is free to use for Go Daddy Auctions. What it does is scan through upcoming expiring domains (their PRO subscription membership lets you scan SnapNames, NameJet, Pool etc. as well as Go Daddy).

(expiring domain names filtered on the number of .edu back links)

My tip for Fresh Drop is to have a good look at the column headers, notice that you can filter domain names on their Age, Dmoz, .Edu and .Gov and lots more. I love this! My other tip is to familiarise yourself with the filters on the right hand menu. Check it out on: http://www.freshdrop.net

Enjoy... and I would love to hear your comments about your experience of buying expiring domain names.

The Background

The co-worker is a link building machine - he could be considered an Arnold Schwarzenegger of the link building world, except his phrase is not "I will be back", it is more like "I am not going anywhere"....

Me? Well, my strengths lie more in on-page optimisation. This major difference is perhaps where this year-long debate has stemmed from...

The Arguments

For Content: Whilst acknowledging the co-worker's argument, and the importance of links to any search marketing effort, I remain in favour of quality, keyword rich content as the more significant element. I am debating for the user whilst the co-worker is debating for the search engines. In my opinion, the ultimate objective for any website is to intrigue users, have them navigate throughout the site and hopefully get them to convert.

A website can have a million quality links but, if it has poor or limited content that is not relevant to the search, it is likely that any user will instantly bounce off the website. What will gain conversions, links or content? And if this is, after all, the objective, should there not then be more importance placed on quality content rather than links? Without great content, link building can prove difficult, particularly when approaching high authority websites. People are more likely to link to strong, well optimised content with relevance to their own website. Be it a product or a service, quality and interesting content will more often than not have people blogging about it or linking their own websites naturally to yours.

For Links: Without links there is little or no chance of ranking on the first page for any competitive terms. They are vital to off-page optimisation in allowing a website to be found and potentially gain a high volume of traffic. Using logos as links on relevant websites also increases online brand awareness, as users will start to recognise the brand. Referral traffic or even direct traffic can increase with this type of link building.

Closing Statement

I put this question to you... User or search engine? Is good content MORE important than links? I ask you this: can you, the user, live in a world where websites revolve around the number of links they have and the importance that Google has placed on these sites, or do you want to create a society where the content is interesting and relevant to your search?

"Let us not despair...

And so, even though we face the difficulties of today and tomorrow, I still have a dream, that one day content will reign over the link weight in the Google algorithm, that content will be able to walk the worldwide web with heads held high.... "

VOTE CONTENT!!!!!